Seems to me that some people are still struggling to populate their fact tables using SSIS. When doing it, they come across issues like these:

- Where do I populate my fact table from?

- How do I get the dimension keys to put into my fact table?

- Where can I get the data for the measures columns?

- With what do I populate the snapshot date column?

- What is the primary key of my fact table?

- The source table doesn’t have a primary key of the source table. What should I do?

- I got duplicate rows in the fact table. How do I handle it?

- The rows with that snapshot date already exist. What should I do?

- Lookup cache: should I choose full cache or partial cache?

- I can’t find the row in the dimension table, what should I do?

As always, the best way to explain is by example, which is more effective than answering the above questions. So I’m going to do the following:

- Describe the background on the company and the data warehouse

- Create the source tables and populate them

- Create the dimension tables and populate them

- Create the fact table (empty)

- Build an SSIS package to populate the fact table, step by step

Background

It’s a van hire company called TopHire. It’s purely fictional of course. Their business system (HireBase) captures the rental information, including the customer information. HireBase has a fleet database where all vans are maintained. HireBase contains only 3 tables:

- Customer: contains 100 customers, e.g. name, data of birth, telephone number, etc. A column called CustomerId uniquely identifies a customer.

- Van: contains 20 vans that Top Hire operates. It’s their fleet. Contains the registration number, engine size, van size, colour, year of manufacture. The unique identifier for each van is the registration number.

- Hire: contains 1000 hire transactions since 1st Jan 2011. Every hire transaction stores: who the customer was, which van was rented out, the date it occurred, number of days and various hire fees/charges (the van, sat nav, insurance, damage waiver and total bill). For each transaction we have Hire ID, which is a unique identifier in this table.

The data warehouse contains 4 tables:

- Date dimension: contains every single date from 2006 to 2016.

- Customer dimension: contains 100 customers. To be simple we’ll make it type 1 so we don’t create a new row for each change.

- Van dimension: contains 20 vans. To be simple we’ll make it type 1 so we don’t create a new row for each change.

- Hire fact table: contains 1000 hire transactions since 1st Jan 2011. It is a daily snapshot fact table so that every day we insert 1000 rows into this fact table. So over time we can track the changes of total bill, van charges, satnav income, etc.

Create the source tables and populate them

So now we are going to create the 3 tables in HireBase database: Customer, Van, and Hire. Then we populate them.

First I’ll show you how it looks when it’s done:

Customer table:

Van table:

Hire table:

And here is the script to create and populate them:

-- Create database

create database HireBase

go

use HireBase

go

-- Create customer table

if exists (select * from sys.tables where name = 'Customer')

drop table Customer

go

create table Customer

( CustomerId varchar(20) not null primary key,

CustomerName varchar(30), DateOfBirth date, Town varchar(50),

TelephoneNo varchar(30), DrivingLicenceNo varchar(30), Occupation varchar(30)

)

go

-- Populate Customer

truncate table Customer

go

declare @i int, @si varchar(10), @startdate date

set @i = 1

while @i <= 100

begin

set @si = right('0'+CONVERT(varchar(10), @i),2)

insert into Customer

( CustomerId, CustomerName, DateOfBirth, Town, TelephoneNo, DrivingLicenceNo, Occupation)

values

( 'N'+@si, 'Customer'+@si, DATEADD(d,@i-1,'2000-01-01'), 'Town'+@si, 'Phone'+@si, 'Licence'+@si, 'Occupation'+@si)

set @i = @i + 1

end

go

select * from Customer

-- Create Van table

if exists (select * from sys.tables where name = 'Van')

drop table Van

go

create table Van

( RegNo varchar(10) not null primary key,

Make varchar(30), Model varchar(30), [Year] varchar(4),

Colour varchar(20), CC int, Class varchar(10)

)

go

-- Populate Van table

truncate table Van

go

declare @i int, @si varchar(10)

set @i = 1

while @i <= 20

begin

set @si = convert(varchar, @i)

insert into Van

( RegNo, Make, Model, [Year], Colour, CC, Class)

values

( 'Reg'+@si, 'Make'+@si, 'Model'+@si,

case @i%4 when 0 then 2008 when 1 then 2009 when 2 then 2010 when 3 then 2011 end,

case when @i%5<3 then 'White' else 'Black' end,

case @i%3 when 0 then 2000 when 1 then 2500 when 2 then 3000 end,

case @i%3 when 0 then 'Small' when 1 then 'Medium' when 2 then 'Large' end)

set @i = @i + 1

end

go

select * from Van

-- Create Hire table

if exists (select * from sys.tables where name = 'Hire')

drop table Hire

go

create table Hire

( HireId varchar(10) not null primary key,

HireDate date not null,

CustomerId varchar(20) not null,

RegNo varchar(10), NoOfDays int, VanHire money, SatNavHire money,

Insurance money, DamageWaiver money, TotalBill money

)

go

-- Populate Hire table

truncate table Hire

go

declare @i int, @si varchar(10), @DaysFrom1stJan int, @CustomerId int, @RegNo int, @mi int

set @i = 1

while @i <= 1000

begin

set @si = right('000'+convert(varchar(10), @i),4) -- string of i

set @DaysFrom1stJan = (@i-1)%200 --The Hire Date is derived from i modulo 200

set @CustomerId = (@i-1)%100+1 --The CustomerId is derived from i modulo 100

set @RegNo = (@i-1)%20+1 --The Van RegNo is derived from i modulo 20

set @mi = (@i-1)%3+1 --i modulo 3

insert into Hire (HireId, HireDate, CustomerId, RegNo, NoOfDays, VanHire, SatNavHire, Insurance, DamageWaiver, TotalBill)

values ('H'+@si, DateAdd(d, @DaysFrom1stJan, '2011-01-01'),

left('N0'+CONVERT(varchar(10),@CustomerId),3), 'Reg'+CONVERT(varchar(10), @RegNo),

@mi, @mi*100, @mi*10, @mi*20, @mi*40, @mi*170)

set @i += 1

end

go

select * from Hire

Create the Data Warehouse

So now we are going to create the 3 dimension tables and 1 fact table in the data warehouse: DimDate, DimCustomer, DimVan and FactHire. We are going to populate the 3 dimensions but we’ll leave the fact table empty. The purpose of this article is to show how to populate the fact table using SSIS.

First I’ll show you how it looks when it’s done:

Date Dimension:

Customer Dimension:

Van Dimension:

Hire Fact Table:

And then we do it. This is the script to create and populate those dim and fact tables:

-- Create the data warehouse

create database TopHireDW

go

use TopHireDW

go

-- Create Date Dimension

if exists (select * from sys.tables where name = 'DimDate')

drop table DimDate

go

create table DimDate

( DateKey int not null primary key,

[Year] varchar(7), [Month] varchar(7), [Date] date, DateString varchar(10))

go

-- Populate Date Dimension

truncate table DimDate

go

declare @i int, @Date date, @StartDate date, @EndDate date, @DateKey int,

@DateString varchar(10), @Year varchar(4),

@Month varchar(7), @Date1 varchar(20)

set @StartDate = '2006-01-01'

set @EndDate = '2016-12-31'

set @Date = @StartDate

insert into DimDate (DateKey, [Year], [Month], [Date], DateString)

values (0, 'Unknown', 'Unknown', '0001-01-01', 'Unknown') --The unknown row

while @Date <= @EndDate

begin

set @DateString = convert(varchar(10), @Date, 20)

set @DateKey = convert(int, replace(@DateString,'-',''))

set @Year = left(@DateString,4)

set @Month = left(@DateString, 7)

insert into DimDate (DateKey, [Year], [Month], [Date], DateString)

values (@DateKey, @Year, @Month, @Date, @DateString)

set @Date = dateadd(d, 1, @Date)

end

go

select * from DimDate

-- Create Customer dimension

if exists (select * from sys.tables where name = 'DimCustomer')

drop table DimCustomer

go

create table DimCustomer

( CustomerKey int not null identity(1,1) primary key,

CustomerId varchar(20) not null,

CustomerName varchar(30), DateOfBirth date, Town varchar(50),

TelephoneNo varchar(30), DrivingLicenceNo varchar(30), Occupation varchar(30)

)

go

insert into DimCustomer (CustomerId, CustomerName, DateOfBirth, Town, TelephoneNo,

DrivingLicenceNo, Occupation)

select * from HireBase.dbo.Customer

select * from DimCustomer

-- Create Van dimension

if exists (select * from sys.tables where name = 'DimVan')

drop table DimVan

go

create table DimVan

( VanKey int not null identity(1,1) primary key,

RegNo varchar(10) not null,

Make varchar(30), Model varchar(30), [Year] varchar(4),

Colour varchar(20), CC int, Class varchar(10)

)

go

insert into DimVan (RegNo, Make, Model, [Year], Colour, CC, Class)

select * from HireBase.dbo.Van

go

select * from DimVan

-- Create Hire fact table

if exists (select * from sys.tables where name = 'FactHire')

drop table FactHire

go

create table FactHire

( SnapshotDateKey int not null, --Daily periodic snapshot fact table

HireDateKey int not null, CustomerKey int not null, VanKey int not null, --Dimension Keys

HireId varchar(10) not null, --Degenerate Dimension

NoOfDays int, VanHire money, SatNavHire money,

Insurance money, DamageWaiver money, TotalBill money

)

go

select * from FactHire

Now you can see that the 3 dimensions have been populated. And the fact table is created and empty, ready for us to populate it.

Build the SSIS Package to Populate the Fact Table

First I show you how it looks when it’s done. This is the overall workflow we are going to build:

This is a bit of description on each of the 6 boxes above:

- Read Hire table in HireBase: First we get the original OLTP records from the hire table in HireBase.

- Get Snapshot Date Key: we get today’s date and convert it into an integer as Snapshot Date Key. FactHire is a snapshot fact table. A periodic snapshot fact table. Which means that every day we populate the fact table with 1000 rows like this:Next week, on Monday 28th May, we insert 1000 rows to the fact table, which is all the rows in the Hire table. Then on Tuesday 29th May we insert 1010 rows. Because there would be 10 new hire transactions in the Hire table in HireBase system. The 1000 “old” rows might change as well on Tuesday, not all of them will be the same as Monday. Perhaps there are 10 rows which are modified, the amount/fees changed, etc. Whatever the condition of the Hire table on Tuesday, we are taking all rows in put them into our data warehouse. And we do this every day. We every day, put whatever is in the Hire table into FactHire. That’s what Periodic Snapshot fact table means.Hence in a periodic snapshot fact table we have a “Snapshot Date”. Meaning: the date when we captured the source table. Or, the date when these 1000 rows were inserted into this fact table. The value of this snapshot date is today’s date (the date the ETL runs). The date the load happens.

- Get Customer Key: from Hire table we get the Customer ID which was involved in the hire transaction. We then go to the Customer Dimension to get the Customer Key for this Customer ID.

- Get Van Key: from Hire table we get the Registration Number which was rented out in the transaction. We then go to the Van Dimension to get the Van Key for this Registration Number.

- Get Hire Date Key: from Hire table we get the Hire Date, which is the date when the hire transaction happened. We then go to the Date Dimension to get the Date Key for this Hire Date.

- Populate FactHire: After we get all the required dimension keys, we insert the rows into the FactHire fact table.

Now that we know what to build, let’s build it. The box numbers below refer to the 6 boxes above.

Box 1. Read Hire table in HireBase

We create the data source in the workflow. It’s OLE DB Data Flow Source, from HireBase. We take all columns, so we don’t specify a query:

Box 2. Get Snapshot Date Key

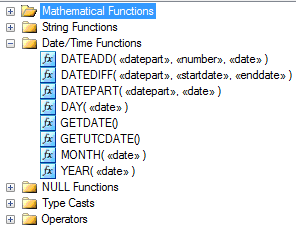

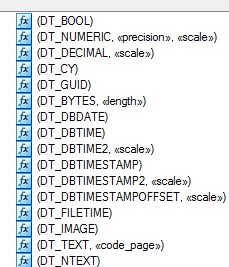

Create a Derived Column and set the Expression to:

(DT_I4)((DT_WSTR,4)YEAR(GETDATE()) + RIGHT(“0” + (DT_WSTR,2)MONTH(GETDATE()),2) + RIGHT(“0” + (DT_WSTR,2)DAY(GETDATE()),2))

See the complete article here.

Box 3. Get Customer Key

Create a Lookup transformation and set to Full Cache, OLE DB connection manager, Fail component, like this:

Full cache: SSIS reads all rows from DimCustomer into memory, then read the Hire table and SSIS doesn’t need to read DimCustomer any more. All lookup operations are done in memory.

Partial cache: SSIS build the memory cache while reading the rows from Hire table. When SSIS reads the rows from Hire table, it tries to find the Customer Key in memory cache. If it can’t find it, it reads the DimCustomer on disk.

No cache: SSIS doesn’t build memory cache. SSIS joins DimCustomer to Hire table (using RDBMS operation) to find the Customer Key.

Cache Connection Manager (CCM): SSIS reads DimCustomer and put in memory cache. This cache can be reused and shared between several Lookups, and across several dataflows. CCM reduces database workload.

OLE DB connection manager: a table in the warehouse (in this case it’s DimCustomer), see Connection pane below:

In the Columns pane, connect Customer Id from the Hire table to the Customer Id on the DimCustomer table, and bring the CustomerKey as output:

Box 4: Get Van Key

It’s the same as Get Customer Key above, i.e. using Lookup transform. When connecting [Get Customer Key] and [Get Van Key] choose Lookup Match Output. I’ll come back for Lookup No Match Output at the end of the article. I just want to get this up and running first. We should always get a simple one first up and running, then build up from there.

Box 5. Get Hire Date Key

It’s the same as Get Customer Key above.

Box 6. Populate FactHire

It’s OLE DB destination. On the Connection Manager pane, specify FactHire as the target table, and leave everything as is:

On the Mapping pane, the source column has the same name as the destination column, except HireDateKey:

That’s it. Run it and all should be green, inserting 1000 rows into the target fact table:

And the FactHire fact table now looks like this:

The 4 dimension key columns are populated with the surrogate key from the respective dimension.

Wrapping Up

The above workflow works but there are 2 things we need to do:

- The no match rows

If in the Hire table we have a CustomerId which does not exist in the Customer Dimension, we need to set the CustomerKey to 0.

- Delete fact rows, if exists

If today’s rows exists in the target fact table, delete them first.

As this is long enough. I’m going to put it as a separate article (link).

Hope this helps,

Vincent Rainardi

26/10/12: Data type error when mapping HireDate column to Date column

On Box 5 Get Hire Date Key, on the Lookup Transformation editor, on the Columns pane (see below), when we try to map HireDate column from Available Input Columns to the Date column on Available Lookup Columns, we get an error message: Cannot map the input column, ‘HireDate’, to the lookup column, ‘Date’, because the data types do not match:

Thank you to Masu for pointing this out.

This is because on the Hire table (the input), the HireDate column is in date data type, whereas in the DimDate table, the Date column is in varchar(10).

To solve this issue, in the Get Hire Date Key task, in the Connection pane, instead of “Use a table or a view”, select “Use results of an SQL query” and type:

select DateKey, Year, Month, convert(date, Date) from DimDate where date <> ‘Unknown’

Because both are now of data type Date, in the column pane you won’t have any issue connecting HireDate to Date: