Yesterday someone asked me what I would include in an “introduction to data warehousing” (including data marts). I have been asked that question a lot over the years, because I wrote a book on data warehousing, and a blog too. People contact me through my blog and ask “I want to learn data warehousing, what should I study?” From 2008 to 2010 the answer was not difficult: “Just read my book”. But today, in 2022, there has been so much change in data warehousing that what I wrote in 2007 is no longer enough. It still provides the basics, but there are so many new things going on.

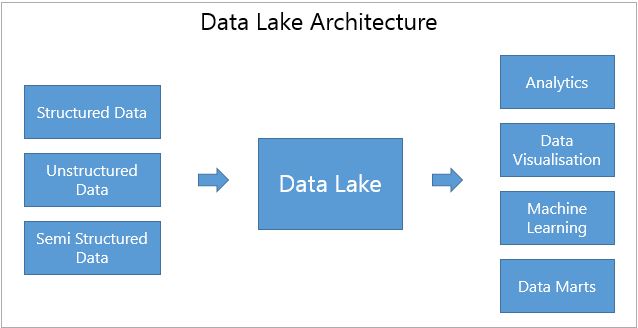

For a start, the architecture is now different. See the architecture for a modern data warehouse here: link. Then we have a data lake. See the architecture of data lake + data warehouse here: link. And now we have a Data Lakehouse, a data lake which has data warehouse features: link.

So what would I write or say to someone who is completely new to data warehousing and wants to learn about it? Do I say “Don’t learn it”? I actually thought about this answer for a long time. Is data warehousing no longer relevant in 2022? Has it been completely replaced by data lakes? Can it still be useful in the era of AI / machine learning?

Still Relevant

After considering many factors and weighing up everything, I am now sure that the answer is “It is still relevant”. You still need to learn data warehousing. Why is that? For a start, a data warehouse integrates data from many different sources. Does a data lake do that? No. A data lake only puts the data in one place but does not integrate it. A dimensional model is the right way to integrate customers, to integrate products, to integrate sales from multiple sources (those who have experienced it will understand this). An MDM system integrates the master data, but not the transaction data. You need a DW/mart to integrate both.
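To make “integrating customers” a bit more concrete, here is a minimal sketch in Python. Everything in it is hypothetical: the two source systems, the field names, and the assumption that a lower-cased email address is a good enough match key. Real projects usually need fuzzier matching rules or an MDM hub, so treat this as an illustration of the idea rather than a recipe.

```python
# Hypothetical customer records from two source systems (illustrative only).
crm_customers = [
    {"crm_id": "C-001", "name": "Acme Ltd", "email": "ap@acme.example"},
    {"crm_id": "C-002", "name": "Globex", "email": "finance@globex.example"},
]
billing_customers = [
    {"bill_id": 9001, "name": "ACME LTD.", "email": "AP@acme.example"},
    {"bill_id": 9002, "name": "Initech", "email": "pay@initech.example"},
]


def build_customer_dim(crm, billing):
    """Build one conformed customer dimension with a surrogate key.

    Assumption: the lower-cased email identifies the same customer
    in both systems.
    """
    dim = {}  # match key -> dimension row
    for row in crm:
        key = row["email"].lower()
        dim[key] = {
            "customer_key": len(dim) + 1,  # surrogate key
            "name": row["name"],
            "crm_id": row["crm_id"],
            "bill_id": None,
        }
    for row in billing:
        key = row["email"].lower()
        if key in dim:
            # Same customer seen in both systems: keep one row, store both IDs.
            dim[key]["bill_id"] = row["bill_id"]
        else:
            dim[key] = {
                "customer_key": len(dim) + 1,
                "name": row["name"],
                "crm_id": None,
                "bill_id": row["bill_id"],
            }
    return list(dim.values())


customer_dim = build_customer_dim(crm_customers, billing_customers)
for row in customer_dim:
    print(row)
```

A data lake would simply hold the two source files side by side. The single customer row that carries both source IDs is the part that takes integration work.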

Secondly, a data warehouse is easy to use. It uses a star schema which provides the granularity at the base level, and yet it is easily rolled up to higher levels. And you can do this roll-up on any attribute. It has developed over 20 years, it’s mature and has been implemented across all kinds of use cases, thanks to Bill Inmon, Ralph Kimball, Margy Ross, Bob Becker, Joy Mundy, Warren Thornthwaite, Chris Adamson, Nicholas Galemmo, Claudia Imhoff, and thousands of other dimensional practitioners. See my Who’s Who in Data Warehousing here, and all data warehousing authors here.
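As a small illustration of rolling up on any attribute, the sketch below builds a tiny star schema in an in-memory SQLite database (Python's standard `sqlite3` module). The table names, columns and figures are made up for the example; the point is only that the same fact table can be summed by any dimension attribute with the same two joins.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript("""
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY,
                          product_name TEXT, category TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY,
                       year INTEGER, month INTEGER);
CREATE TABLE fact_sales (product_key INTEGER, date_key INTEGER, amount REAL);

INSERT INTO dim_product VALUES
    (1, 'Bike', 'Outdoor'), (2, 'Tent', 'Outdoor'), (3, 'Lamp', 'Home');
INSERT INTO dim_date VALUES (20220101, 2022, 1), (20220201, 2022, 2);
INSERT INTO fact_sales VALUES
    (1, 20220101, 100.0), (2, 20220101, 50.0),
    (3, 20220201, 30.0), (1, 20220201, 20.0);
""")

# Whitelist of attributes, so no arbitrary text is interpolated into the SQL.
ATTRIBUTES = {
    "category": "p.category",
    "product_name": "p.product_name",
    "year": "d.year",
    "month": "d.month",
}


def roll_up(attribute):
    """Sum the sales fact by one dimension attribute."""
    column = ATTRIBUTES[attribute]
    sql = f"""
        SELECT {column}, SUM(f.amount)
        FROM fact_sales f
        JOIN dim_product p ON p.product_key = f.product_key
        JOIN dim_date d ON d.date_key = f.date_key
        GROUP BY {column}
        ORDER BY {column}
    """
    return con.execute(sql).fetchall()


by_category = roll_up("category")
by_month = roll_up("month")
print(by_category)
print(by_month)
```

The fact table stays at the base granularity (one row per product per date), and each roll-up is just a different `GROUP BY` over the same joins.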

In hundreds of thousands of companies throughout the world, big and small, a data warehouse is the back end of millions of reports and analytics. It supports business intelligence and now machine learning too. You need to have a working knowledge of data warehousing to work in the reporting team in these companies (almost every company has one). Not just modelling techniques, but also loading techniques. And reporting techniques. And infrastructure knowledge too, i.e. database, security, DevOps.

Replaced By Data Lakehouse?

Is it true that data warehousing can be replaced by a Data Lakehouse? I said that here. But I was wrong. Yes, a data lakehouse has ACID properties. But that does not make it a star schema. It only makes it a database with lots of disconnected tables. Can you join the tables? Of course you can, but it is not as slick as a star schema. And nowhere near as integrated (e.g. customers, products, securities). Those who have tried different approaches to data integration will appreciate the power of the dimensional approach.

Can we build a dimensional model in a data lakehouse? Of course we can, but it is not the right platform. It is far better to build the dimensional model on a relational database. Physically, a data lakehouse is just a collection of files, and the reason to put a SQL layer on top is to enable us to access the files easily. But a data lakehouse was not designed to do the joins, indexing, filtering, etc. that a dimensional model requires. The dimensional model was designed for and born on a relational database platform, which supports it very well.

A Data Lakehouse can’t replace a dimensional data warehouse, because it doesn’t have integration capability and it’s not easy to use/query. If what you need is to put data from different systems in one place, then yes, use a Data Lakehouse (which is better than a data lake, see here). But the data will be disintegrated. You won’t have a single customer table. Or a single product table. There won’t be conversion between different measures (say sales from different systems) to integrate them into one table.

Reporting is all about integrating data from various data sources. If you only have one data source, then you can just report from that single source directly. It is because your data is in different places that you need a data warehouse. A data lake or a data lakehouse does not “stitch” the data. Inside a data lake/lakehouse the data is still disintegrated.

Please read James Serra’s article on this point here: link. I completely agree with James that for a small amount of data (say 10 tables), or if the users are all data scientists, or to build a POC or to get a quick win with a report/dashboard, then you can use a Data Lakehouse.

Don’t worry if you don’t know what a Lakehouse is; many people in data warehousing and BI don’t. For that I would recommend reading the CIDR 2021 paper by Armbrust et al.: link. This paper explains the Lakehouse architecture and how it addresses some of the data warehousing challenges.

What to study in data warehousing?

So, these days, what does one need to study in data warehousing? (I include data marts.)

First of all, they need to understand the problem, preferably illustrated using several cases. The problem is that it is difficult to report on or analyse the data: because the data needs to be transformed, because the data sets do not conform to one another, because the data is located in different places. They need to understand the purpose of the whole exercise, i.e. a data warehouse/mart makes reporting seamless and easy because the data is integrated and conformed. The purpose is to enable users to do analysis and reporting.

They can call it BI, AI, or whatever, but they must realise that it is only possible because all the data they need is integrated in one place. Yes, a BI system can connect to the US, Japan and France databases to get the sales figures, but that is not integration. They have to realise that there are FX rates to apply, that one data set is quarterly while another is daily or monthly, and that different data sets use different product codes and different hierarchies. How do you integrate them? They must appreciate what a data warehouse (and all the analysts, developers and testers behind it) goes through to integrate the sales data worldwide, the AUM data worldwide, the customer data worldwide, the financial data worldwide. Not “all the data in one place”. But: “all the data integrated in one place”. The difference between the two is not one little word. The difference, my friends, is a million dollars and 2 years of hard work.

It’s equally important to understand that if the client is small enough and lucky enough to have just one currency, one office and one system, then they don’t need a data warehouse. They can report directly from that system. Because of their size, there is no way to justify spending a million dollars, or having 5-10 people in IT working on this for 2 years. If the student understands this perspective, they are on the right track.
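The integration work described above (FX rates, different periodicity, different product codes) can be sketched in a few lines of Python. All the numbers here are invented for illustration: the FX rates are not real rates, the product codes are hypothetical, and a real warehouse would store dated rates and handle far more edge cases.

```python
from collections import defaultdict

# Hypothetical FX rates to USD (not real rates; a real DW stores dated rates).
FX_TO_USD = {"USD": 1.0, "JPY": 0.01, "EUR": 1.25}

# Hypothetical mapping from local product codes to one conformed code.
PRODUCT_MAP = {
    ("US", "BK-1"): "BIKE",
    ("JP", "J100"): "BIKE",
    ("FR", "VELO"): "BIKE",
}

# Three sources with three different periodicities.
us_daily = [
    {"date": "2022-01-15", "product": "BK-1", "amount": 200.0},
    {"date": "2022-02-20", "product": "BK-1", "amount": 100.0},
]
jp_monthly = [{"month": "2022-03", "product": "J100", "amount": 50000.0}]
fr_quarterly = [{"quarter": "2022-Q1", "product": "VELO", "amount": 400.0}]


def quarter_of(year_month):
    """Turn 'YYYY-MM' or 'YYYY-MM-DD' into 'YYYY-Qn'."""
    year, month = year_month.split("-")[:2]
    return f"{year}-Q{(int(month) - 1) // 3 + 1}"


def integrate():
    """Conform all sources to quarter, conformed product code and USD."""
    totals = defaultdict(float)

    def add(country, currency, quarter, product, amount):
        conformed = PRODUCT_MAP[(country, product)]
        totals[(quarter, conformed)] += amount * FX_TO_USD[currency]

    for r in us_daily:
        add("US", "USD", quarter_of(r["date"]), r["product"], r["amount"])
    for r in jp_monthly:
        add("JP", "JPY", quarter_of(r["month"]), r["product"], r["amount"])
    for r in fr_quarterly:
        add("FR", "EUR", r["quarter"], r["product"], r["amount"])
    return dict(totals)


sales_usd = integrate()
print(sales_usd)
```

Even in this toy version, three decisions had to be made before the numbers could be added up: which currency to report in, which grain to conform to (here the coarsest one, quarterly), and which product code is the master. Those decisions, multiplied across every subject area, are where the time and money go.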

Second, they need to understand why we need to use dimensional modelling. Why not use relational models, or transactional reporting? Why not use a data vault or data mesh? Why not use a data lake or data lakehouse? They need to understand which architecture is suitable for which cases. If you just want to analyse Twitter big data, then there is no need to build a DW or mart. Forget dimensional modelling. You need to use a data lake.

Until they are able to answer “which cases are suitable for data warehousing and which are not”, the learner should not move to the next step. It is better to understand the why and not the what than the opposite. As a learner, ask yourself “why don’t I use a lake or relational?” If you can’t answer this, keep working on this second stage. Keep reading and thinking until you can answer that question.

Third, study the architecture. Start with the architecture of a modern data warehouse (i.e. a lake + a warehouse): link. Look carefully at where ML, BI, IoT (sensors), NoSQL, MDM, DW and marts are positioned in this architecture. Then study dimensional modelling concepts: link. This is a vast topic and you will need at least 3 months to understand all the details. So please don’t get trapped in the details. Instead, keep to the basics, which you can learn in a week. And as a beginner, limit yourself to only what Kimball wrote (the Kimball Group, not just Ralph Kimball). I can’t say this enough: avoid the advanced stuff and keep to the basics, i.e. fact tables, dimension tables, SCD, hierarchy and that’s it.
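Of the basics listed above, SCD (slowly changing dimension) is the one that tends to confuse beginners most, so here is a minimal sketch of one common variant, Type 2, where a change expires the current row and adds a new version. The column names (`valid_from`, `valid_to`, `is_current`) and the high end date are conventions I am assuming for the example, not something prescribed by a particular tool.

```python
from datetime import date

HIGH_DATE = date(9999, 12, 31)  # assumed convention for an open-ended row


def apply_scd2(dim_rows, business_key, new_attrs, effective):
    """Apply a Type 2 change: expire the current row, append a new version."""
    current = next(
        (r for r in dim_rows
         if r["business_key"] == business_key and r["is_current"]),
        None,
    )
    if current is not None:
        if current["attrs"] == new_attrs:
            return dim_rows  # nothing changed, keep the current version
        current["valid_to"] = effective
        current["is_current"] = False
    dim_rows.append({
        "surrogate_key": len(dim_rows) + 1,
        "business_key": business_key,
        "attrs": dict(new_attrs),
        "valid_from": effective,
        "valid_to": HIGH_DATE,
        "is_current": True,
    })
    return dim_rows


dim_customer = []
apply_scd2(dim_customer, "C-001", {"city": "London"}, date(2021, 1, 1))
apply_scd2(dim_customer, "C-001", {"city": "Leeds"}, date(2022, 6, 1))
apply_scd2(dim_customer, "C-001", {"city": "Leeds"}, date(2022, 7, 1))  # no-op

for row in dim_customer:
    print(row)
```

The customer keeps one business key but gets a new surrogate key per version, so old facts still point at the London row and new facts point at the Leeds row.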

After studying the basics of dimensional modelling, ask yourself: why use a star schema? What is the advantage compared to other models (e.g. snowflake, relational, data vault, ODS)? Until you can answer this question you shouldn’t move on to the next step. There is no point understanding the what if you don’t understand the why. Keep reading until you can answer that question: why do you have to arrange the data in dimensions and facts, why not a relational model, snowflake model, data vault model, or ODS model?

So those are the first 3 things to learn in data warehousing: the purpose, the architecture, and dimensional modelling.

How DW is used and populated

After those first 3 things, the next stage is to understand how a DW/mart is used: BI, reporting & analytics. Not how to get the data out of the DW/mart, but how the reports will be used. Many people are against this. They feel that people in IT don’t need to know the business. On the contrary, we absolutely need to know the business: what the data will be used for. The whole of the design is based on how it will be used. So if you don’t know the business, your DW will be poorly designed, and your reports/dashboards too.

15 years ago BI & reporting were almost the whole purpose of data warehousing. How about today, are they still relevant? Does one still need to learn BI & reporting tools such as Power BI, Tableau and Looker? Yes, today BI is still a major use of a data warehouse. And yes, one still needs to learn BI/reporting tools. But I would argue that, considering there are so many things to learn in modern DW, BI/reporting tools can be considered a separate skill to master. Ditto ML (AI): more and more, DWs are used for ML, but does that make ML one of the subjects that one needs to learn in DW? No, it is a separate subject. So when learning data warehousing, I would recommend not learning BI tools and ML at the same time. Finish learning DW first (see below), then do BI or ML (not both; pick only one because each is a very large subject to learn).

Then you need to understand the various techniques of data loading: ETL, ELT, ingestion, ETLTL, streaming, (small) batching, and so on. Knowing the model is one thing, but loading data into that model is a completely different thing. There are various tricks and methods that we need to know. Staging area, data quality, alerting, monitoring, error handling: all of these need to be built.
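As a rough idea of what “staging, data quality and error handling” look like in code, here is a small sketch in plain Python. The staged rows, the two quality rules and the logger name are all hypothetical; a real load would use an ETL tool or pipeline framework, but the shape (validate each staged row, load the good ones, log and keep the rejects) is the same.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("dw_load")  # hypothetical logger name


def validate(row):
    """Return a list of data quality problems for one staged row."""
    problems = []
    if not row.get("customer_id"):
        problems.append("missing customer_id")
    try:
        if float(row.get("amount")) < 0:
            problems.append("negative amount")
    except (TypeError, ValueError):
        problems.append("amount is not a number")
    return problems


def load(staged_rows):
    """Split staged rows into rows to load and rows to reject."""
    loaded, rejected = [], []
    for row in staged_rows:
        problems = validate(row)
        if problems:
            # Keep the reject with its reasons so it can be fixed and reloaded.
            rejected.append({"row": row, "problems": problems})
            log.warning("Rejected row %r: %s", row, ", ".join(problems))
        else:
            loaded.append({
                "customer_id": row["customer_id"],
                "amount": float(row["amount"]),  # cast from staging text
            })
    log.info("Loaded %d rows, rejected %d", len(loaded), len(rejected))
    return loaded, rejected


# Staged data arrives as text, exactly as extracted from the source.
staging = [
    {"customer_id": "C-001", "amount": "10.5"},
    {"customer_id": "", "amount": "3"},
    {"customer_id": "C-002", "amount": "abc"},
]
loaded, rejected = load(staging)
```

The design choice worth noticing is that bad rows do not stop the load. They are set aside with a reason, which is what makes alerting and monitoring possible afterwards.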

A modern data warehouse

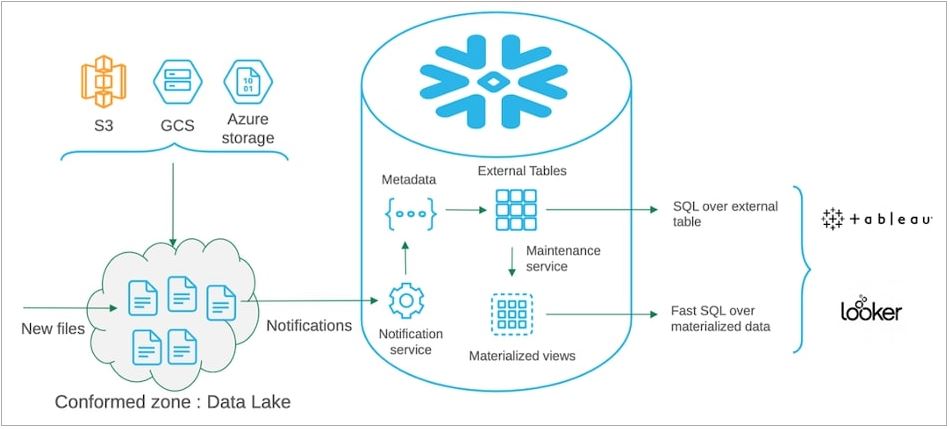

In a Modern Data Warehouse, we have a lake and a warehouse (link). So not only do you need to know how to load data into the dimensional model, but also how to ingest data into the data lake (preferably a data lakehouse, along with loading the metadata too), and how to get the data out of the lake into the warehouse. So it is critical that you know the ins and outs of data lakes: ADLS2, ADF pipelines, Synapse, Databricks, Python notebooks, Spark, Linked Service, Key Vault, API, storage account, resource groups (if you use Azure, otherwise the corresponding items in AWS (Redshift) or Google Cloud (BigQuery)). This is where an old school DW practitioner usually gets stuck: a modern DW demands cloud technology, and they are stuck in the old SQL world. They are not flexible enough (or humble enough) to adapt and learn the cloud and the data lake technologies.

A modern data warehouse can also have machine learning, streaming data and master data management. Machine learning is so popular these days that every company seems to be using it. It’s no longer about BI, it’s about AI. So you need to know how those data scientists are using the data for making predictions, which is completely different to BI and reporting. Streaming data like social media feeds, IoT feeds (Internet of Things/sensors), and audio-visual streams are quite common these days (yes, even in banking). For this we use Kafka or Azure Stream Analytics (or Kinesis/Flink in AWS). Data mastering is usually outside a modern DW, but is sometimes included in the ecosystem.

Data Governance & DevOps

A DW ecosystem must always be accompanied by a data governance program. Yes, the data quality process is built in (into the modern DW ecosystem), but Data Governance is completely different. Data Governance includes data definition, data ownership, data mastering, data lineage, and data quality. Whether you are doing a lake, a DW, an ODS, a Data Vault or even a transaction system database, you need to understand Data Governance. Otherwise what you are doing doesn’t make sense. There is no point creating a data warehouse if we don’t understand the principles of Data Governance.

A data warehouse without Data Governance may be able to deliver in the short term, but not in the long term. So we need to know it. Data Governance is a vast world with a myriad of tools, so as a beginner you will need to limit yourself to just the essentials. Just try to understand the concepts, and forget about the tools and how to use each of them.

Any IT development requires DevOps, and data warehousing is no exception. DevOps (development and operations) covers development processes and production processes. Development processes include coding, automated testing, pull requests, branching, release pipelines, as well as the infrastructure such as databases, servers, service accounts, resource groups, storage accounts and networking. Production processes include change control, monitoring data ingestion, setting up alerts, error logging, and managing user access. Knowing DevOps helps a lot in data warehousing.

Recap

To recap:

- I want to learn data warehousing, what should I learn?

The purpose, the architecture and dimensional modelling.

Then the business knowledge, BI/reporting and data loading.

Then data governance and DevOps.

- Is learning data warehousing still relevant today?

Yes, learning data warehousing is still relevant today because a data warehouse integrates data, because it is easy to use, and because thousands of companies throughout the world have data warehouses.

- Will data warehousing be replaced by data lake/lakehouse?

No, because a data lake/lakehouse doesn’t have integration capability, it is not easy to use/query, and it is not a suitable platform for a dimensional model.

There are business cases which are suitable for a data lake (ones which don’t require data integration), and there are business cases which are suitable for a dimensional data warehouse. They have different purposes and capabilities. A modern DW includes a data lake.

In the meantime, if you are new to data warehousing, or looking for inspiration, read the Who’s Who in Data Warehousing: link. Even for an experienced veteran, it is still a good read. You will recognise all the names, which makes it interesting.

If you are a learner, I wish you all the best in your data warehousing journey. Enjoy your learning experience, that’s the most important thing. Not the results. Or achievements. Any questions, just ping me at vrainardi@gmail.com, I’ll be happy to answer them. I now spend half of my time in machine learning, but still work in data warehousing. And still learning data warehousing.